Over the years I’ve had many computers that are now considered “devices”. The following are not so much reviews as a retrospective on what has changed and what hasn’t.

eMate 300

The oldest device in this photo. I used this in school for taking notes and writing. Easily the device with the longest useful battery life: it would go days without recharging under regular use. Charging was fast enough that I never really worried about whether it was charged or not. Great keyboard, great form factor. Newton OS was great for note taking and writing. Networking was totally beyond it. Syncing was usually done over IrDA and was fast enough for text, just.

Visor Pro MIA

The kids found the charger and the keyboard for my Visor Pro, and even a GPS module. The Visor Pro itself is missing. This little Palm OS device replaced the eMate. In every possible way it was worse… except that it synced over USB, so I could use it with a more modern desktop computer. The keyboard was a four-fold Stowaway; I broke two in the year or two this saw use. Still no networking ability at all. After just a month or two of college with this, I gave up on the idea of a smaller device for note taking and bought a 12-inch PowerBook.

palmOne Treo

My first network-enabled device. I had a (refurbished) phone module for the Visor, but it never really worked right. The web was totally unusable on the Treo, but it did email fine. I also used a tool to convert web pages and feeds into Palm Doc files, which I would use to read news and other things on the Treo. The keyboard wasn’t really usable for more than a quick response. Battery life was reasonably awful as well. This was the first device that basically required battery babysitting from me.

Nokia 770 Internet Tablet

Network-enabled, but not cellular. The first device that did mobile web browsing well enough to actually use, though the browser was still very limited and frustrating. Email worked great, and multi-service IM worked great. It never saw daily use thanks to lackluster battery life and Wi-Fi-only networking.

Nokia N810 Internet Tablet

Best build quality and form factor of any of the devices pictured. The slide-out keyboard worked for long emails, short note taking, IM conversations, etc. The camera was horrible; they shouldn’t have bothered with it. I used it with a Bluetooth-paired smartphone for 3G internet access. Battery life of the N810 was fine; battery life of any cell phone running as a network access point was horrible. I used it while traveling or in long meetings. Attempts to use it as a GPS device led to the next item on the list…

TomTom GO720

Dedicated GPS device. Also a very bad iPod/MP3 player. It provided decent GPS directions that kept us from getting lost while traveling and moving to a new state. Everything other than the GPS functionality was pointless. Battery life was a joke: if the USB power adapter bumped loose on the rough roads around here, it usually turned off a few minutes later with no warning.

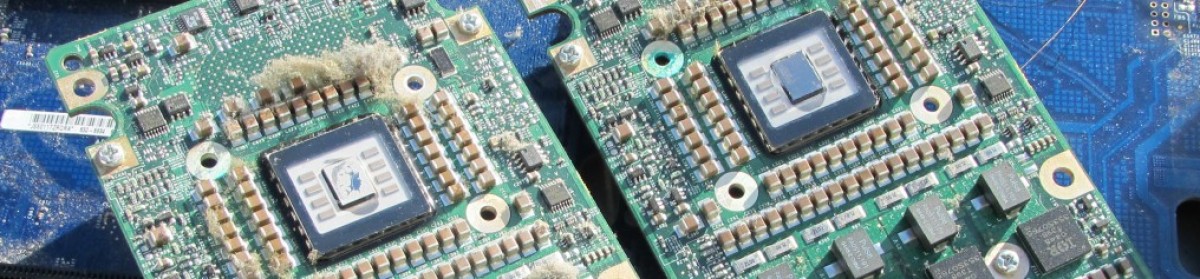

Pair of Nokia 6650 phones

My wife and I used these phones for a long time. Good battery life, good signal, working Bluetooth pairing. Calendar syncing never really worked right. Trying to use the web was a total joke. My wife’s was replaced with an iPhone 4S, mine with the next device.

Google One

Android at this point didn’t really work. I had this phone and would swap a SIM back and forth between it and the Nokia, but I never managed more than a day or so before going back to the Nokia. Simple things like TAKING A PHONE CALL would often fail for odd and confusing reasons. Build-quality-wise, this was the WORST of the list. The keyboard was worse to use than the Treo’s.

Samsung Galaxy S

Web browsing worked fine, email worked fine. Typing speed was about on par with the Treo, but I found I typed longer messages with the soft keyboard than with the old hardware one. Anything longer than an email was still painful. GPS sort of worked, but was overshadowed by the device’s spectacular failure: battery life. The Galaxy S could NOT make it through a whole day of use no matter how much I tried to baby it. I eventually got a custom, home-rolled kernel with a number of patches working alongside the rest of the CyanogenMod distribution, but even then it would limp home with maybe 4% battery left. God help you if you made the mistake of turning on the GPS. Contemplating carrying more than one battery ended the day Apple shipped enough iPhone 5 units to the San Francisco Apple Store.

iPhone 5

Taking the photo, thus not in the photo. Best mobile web browser ever (Chrome for iOS). Best email ever (Gmail). Best camera in a device ever. Totally sucks for note taking. Typing is no better than it was on the Treo. Contact and calendar management has gone nowhere since the Newton. Battery life… well, it makes it through a whole day, so it is just barely good enough.

Raspberry Pi

The best little computer ever. For less than $150 you can have a complete computer (keyboard, mouse, power supply, monitor) running Debian Linux, with the computer itself fitting in your palm. This is magical. You don’t need to write your own board support package for it, you don’t need to flash your own bootloader EPROMs, and you don’t have to hack around in Forth to GET your CPU to start booting in the first place. The computer is fast enough that you don’t have to set up a complex cross-compiler toolchain to get started; you can just apt-get install gcc. For anyone who worked in the embedded space a decade ago, this little box is magical.